Remote Rhapsody: combine a smart speaker and virtual reality

I had a few more days off that I had to take before the end of the year. At the end of 2020, a partial lockdown in Germany meant no travelling and not much socializing, so I decided to go for an online activity and try out something new.

Lately, I had been wanting to start something with AR/VR: a field unknown to me that makes big promises, from “everything is possible within a virtual world” to rapid market growth in the coming years.

Also, Deutsche Telekom, together with Hubraum and BeMyApp, announced a hackathon for their smart speaker: a fast follower of Google and Alexa that has its own virtual assistant AI called Magenta.

The hackathon was about writing a skill for the speaker and publishing it using their voice skill SDK: https://github.com/telekom/voice-skill-sdk

I was curious to attend but did not want to develop a skill that just tells random jokes: we have seen enough of them and we all know that they work 😊 I wanted to really challenge myself (and my teammate) and combine the smart speaker with VR.

The idea is simple: let us build a virtual teacher for children that will engage and teach them, especially during lockdowns and school closures. That would enable children to:

- Answer questions, curated by the teachers

- Be in the same virtual room with their friends

- Take breaks with healthy exercises

The virtual teacher would use the Magenta smart speaker as an input/output device, and the child would wear VR goggles for a seamless experience (or use any TV screen, laptop or tablet). The smart speaker does the heavy lifting for text-to-speech and speech-to-text as well as the Natural Language Processing part.

Surprisingly, the hardware for all of this (speaker and VR goggles) is very affordable at just under 30 Euros: 21 Euros for the speaker, and the rest for one of two VR goggle options.

For the scope of the hackathon we implemented the following dialog:

Parent/Child: Hello Magenta, start the virtual teacher!

V teacher says: Hello Sara, what do you want to do today? Algebra or Geometry? I can also show you some warm up exercises.

Child says: Algebra!

V teacher says: Alright! What is the sum of 3 and 1?

Child says: This makes 4!

V teacher says: Very good. Then I have another question: how much is it if you subtract 3 from 7?

Child says: 2!

V teacher says: Unfortunately that’s not true. Let’s try again. What do you get if you subtract 3 from 7?

Child says: 4!

V teacher says: Very good. Then I have another question: How much does it make if you subtract 12 from 34?

Child says: Change game!

V teacher says: Alright, let’s do some geometry. What is this figure? (shows a triangle)

Child says: triangle!

V teacher says: Very good. Let’s try one more! Do you know what that figure is?

Parent/Child: Exit game!

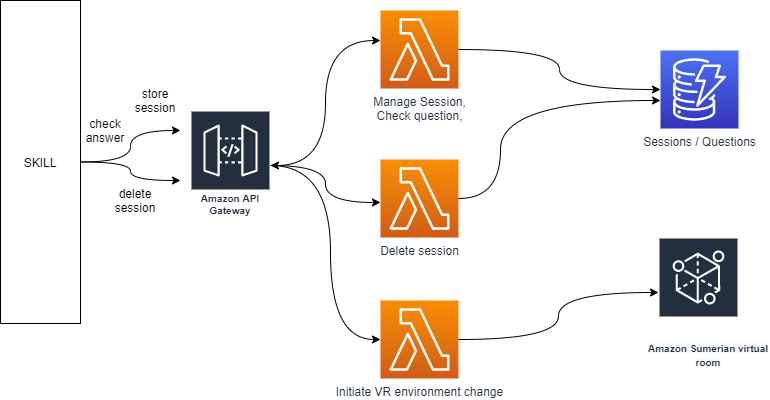

Implementing that conversation turned out to be far from trivial: usually when you interact with a smart speaker, you ask something and the answer ends the conversation. As you can see from the example above, here we have a dialog in which the Virtual Teacher proactively asks for input in an unguided conversation. We dealt with that by attaching a microservice to the skill, isolating the conversation logic in separate serverless functions and keeping state for the skill.

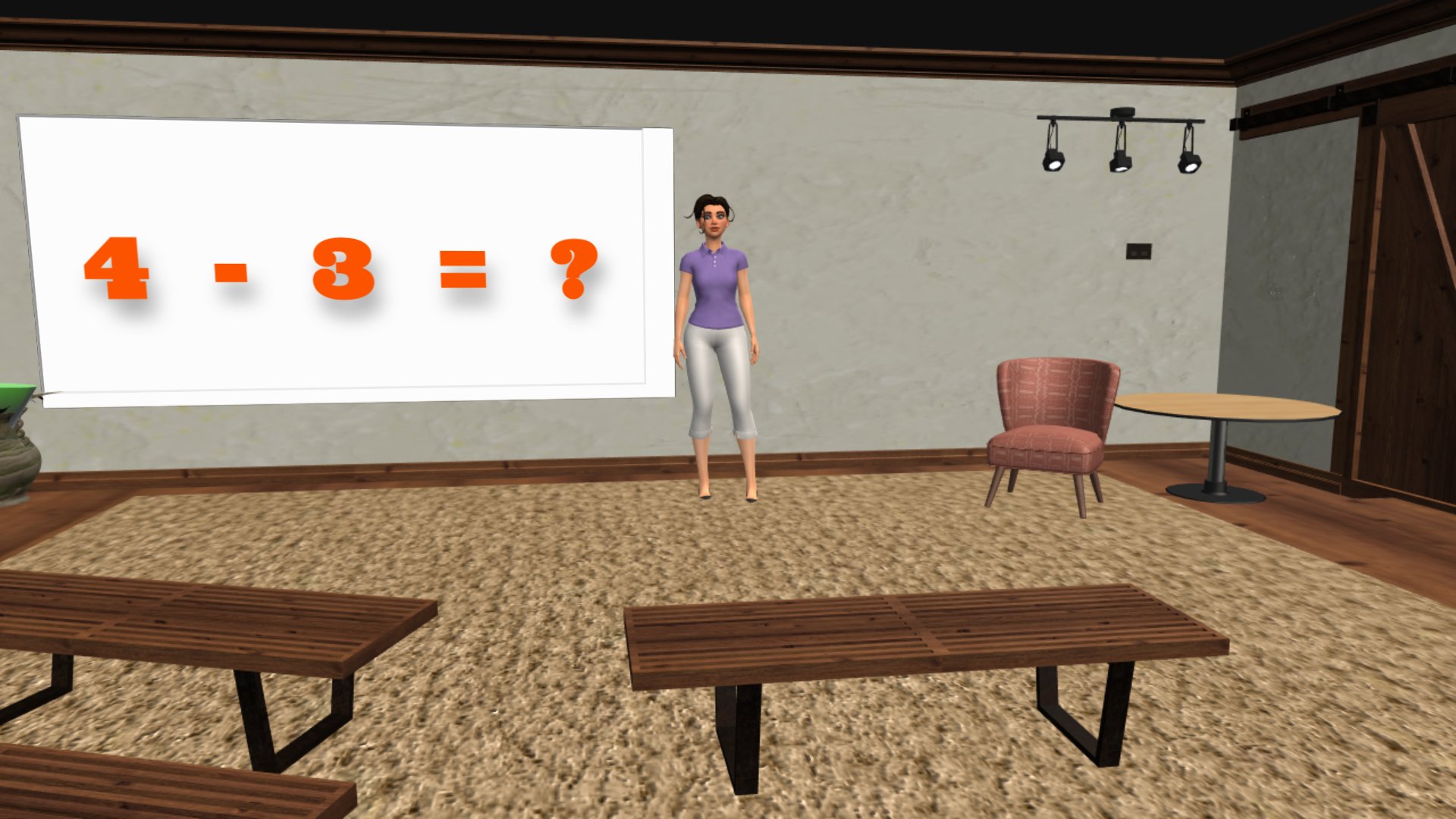
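To make the idea of keeping state between turns more concrete, here is a minimal sketch in plain Python. It is not the code from our repositories: `Session`, `next_turn` and the `QUESTIONS` table are hypothetical names chosen for illustration, and the sketch assumes the speaker's NLP has already reduced the child's sentence to a short answer such as "4" or "triangle".

```python
from dataclasses import dataclass

# Hypothetical question bank; in the real setup teachers would curate it.
QUESTIONS = {
    "algebra": [
        ("What is the sum of 3 and 1?", "4"),
        ("What do you get if you subtract 3 from 7?", "4"),
    ],
    "geometry": [
        ("What is this figure?", "triangle"),
    ],
}


@dataclass
class Session:
    """State remembered between two turns of the same conversation."""
    topic: str = ""
    index: int = 0


def next_turn(session: Session, utterance: str) -> str:
    """Return the teacher's next sentence and update the session in place."""
    text = utterance.strip().lower().rstrip("!.?")

    # No topic chosen yet: either pick one or ask again.
    if not session.topic:
        if text in QUESTIONS:
            session.topic, session.index = text, 0
            return "Alright! " + QUESTIONS[text][0][0]
        return "What do you want to do today? Algebra or Geometry?"

    # Switch to the other topic (this sketch assumes exactly two topics).
    if text == "change game":
        session.topic = "geometry" if session.topic == "algebra" else "algebra"
        session.index = 0
        return "Alright! " + QUESTIONS[session.topic][0][0]

    question, answer = QUESTIONS[session.topic][session.index]
    if text != answer:
        # Wrong answer: stay on the same question.
        return "Unfortunately that's not true. Let's try again. " + question

    # Correct answer: move on, wrapping around at the end of the list.
    session.index = (session.index + 1) % len(QUESTIONS[session.topic])
    return ("Very good. Then I have another question: "
            + QUESTIONS[session.topic][session.index][0])
```

In a serverless setup the `Session` object would have to be loaded and saved on every request, for example keyed by a session identifier, because the function itself does not remember anything between two calls.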

Further, we needed a basic virtual classroom with a whiteboard and a virtual human. A week earlier, I had noticed that AWS has a service called Sumerian for everyone who wants to build AR/VR apps with virtual people inside that have decent gestures and talking capabilities. While Sumerian comes with only a few ready-made assets, you can easily import more in .fbx and .obj format. So the virtual classroom took only a few hours until it was ready to be showcased. I highly recommend watching the Sumerian tutorials for a quick start.

The whiteboard in the virtual classroom is a “3D HTML” element and can render “normal” HTML inside the virtual reality, just like a browser. So we also had to develop a basic web application and embed it in the whiteboard.

To create a skill for the Magenta smart speaker, one can simply use Python, which makes the process very easy for newcomers like us: just import some libraries, write a regex that starts your skill, host and deploy your skill in the Skill Development Portal, and you can already have a conversation with your smart speaker.
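As an illustration of the "regex that starts your skill" step, the snippet below shows only the matching part, using Python's standard `re` module. The pattern and the `starts_skill` helper are assumptions made up for this example; how the expression is actually registered with the SDK or the portal is not shown here.

```python
import re

# Hypothetical trigger pattern, not the expression from the actual skill.
START_PATTERN = re.compile(
    r"\b(start|open|launch)\s+(the\s+)?virtual\s+teacher\b",
    re.IGNORECASE,
)


def starts_skill(utterance: str) -> bool:
    """Return True if the utterance asks to start the virtual teacher."""
    return START_PATTERN.search(utterance) is not None
```

With this pattern, a sentence like "Hello Magenta, start the virtual teacher!" matches, while an unrelated request such as "tell me a joke" does not.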

Once we had all the building blocks in place, we connected them with Lambda functions and APIs, and our solution was ready.
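To give a rough idea of what such a connecting function can look like, here is a minimal Python Lambda handler that returns a JSON document in the response shape expected by an API Gateway proxy integration. The payload and the `CURRENT_BOARD` variable are purely hypothetical: the sketch assumes the whiteboard web application asks an API what it should display, which is one possible design and not necessarily the one in our repositories.

```python
import json

# Hypothetical whiteboard state; a deployed function would read this from
# a data store, since Lambda keeps no state between invocations.
CURRENT_BOARD = {"topic": "geometry", "figure": "triangle"}


def lambda_handler(event, context):
    """Lambda entry point returning an API Gateway proxy-style response."""
    return {
        "statusCode": 200,
        "headers": {
            "Content-Type": "application/json",
            # Allows a browser-based whiteboard page to call the API.
            "Access-Control-Allow-Origin": "*",
        },
        "body": json.dumps(CURRENT_BOARD),
    }
```

The handler can be tried locally by calling `lambda_handler({}, None)` and decoding the `body` field with `json.loads`.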

To wrap up, this hackathon was a really nice experience where I learned a lot and was able to experiment with two great technologies, smart speakers and VR, and the endless use cases around them. The Magenta smart speaker and the skill development platform turned out to be easy to learn, and I can’t wait to see more skills for the speaker soon. Our solution won 3rd place and we both received some nice Christmas presents 🙂

Repositories:

- https://github.com/boriside/hackathon-magenta-voice

- https://github.com/boriside/virtual-teacher-web

- https://github.com/boriside/virtual-teacher-api
